Preface

Before using the Theoretical high-performance computing (HPC) cluster, you will need to obtain access by filling out the Theoretical Cluster Request Form.

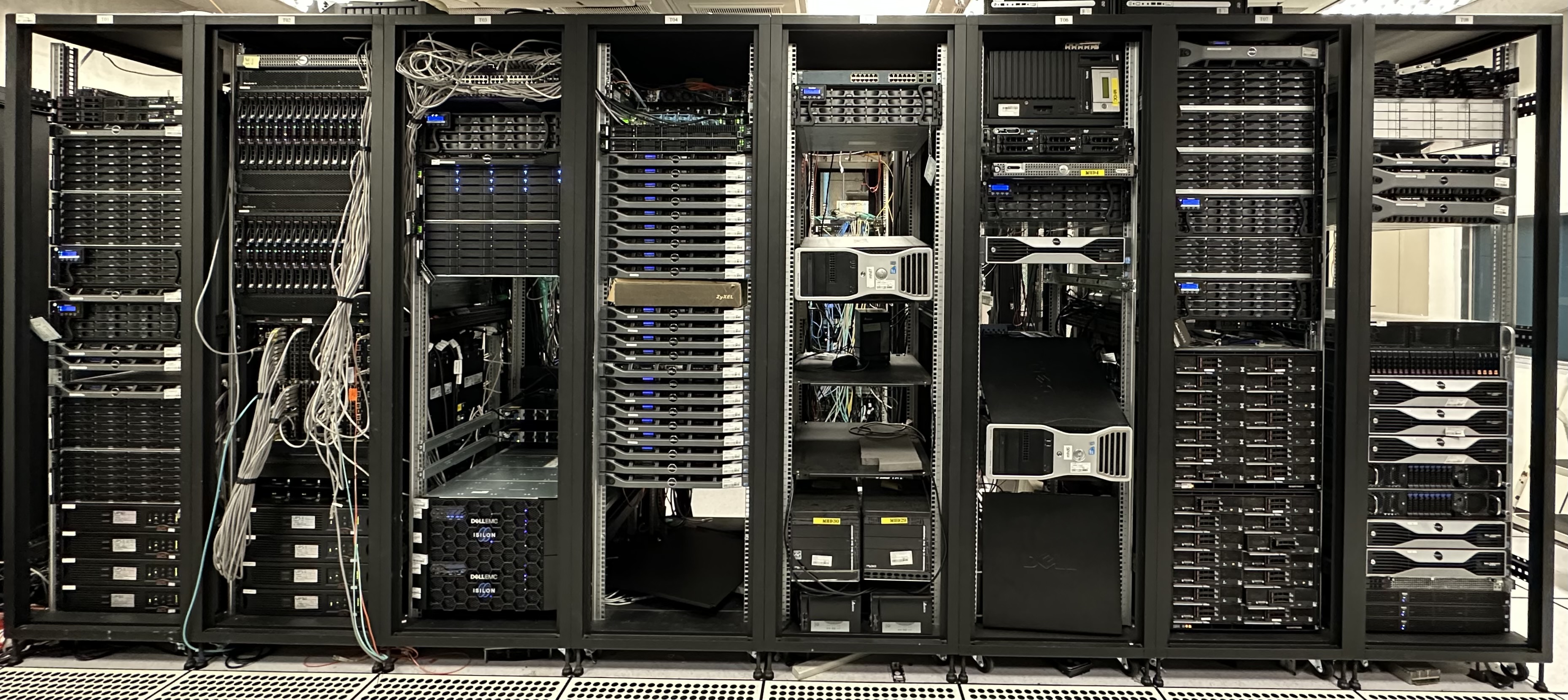

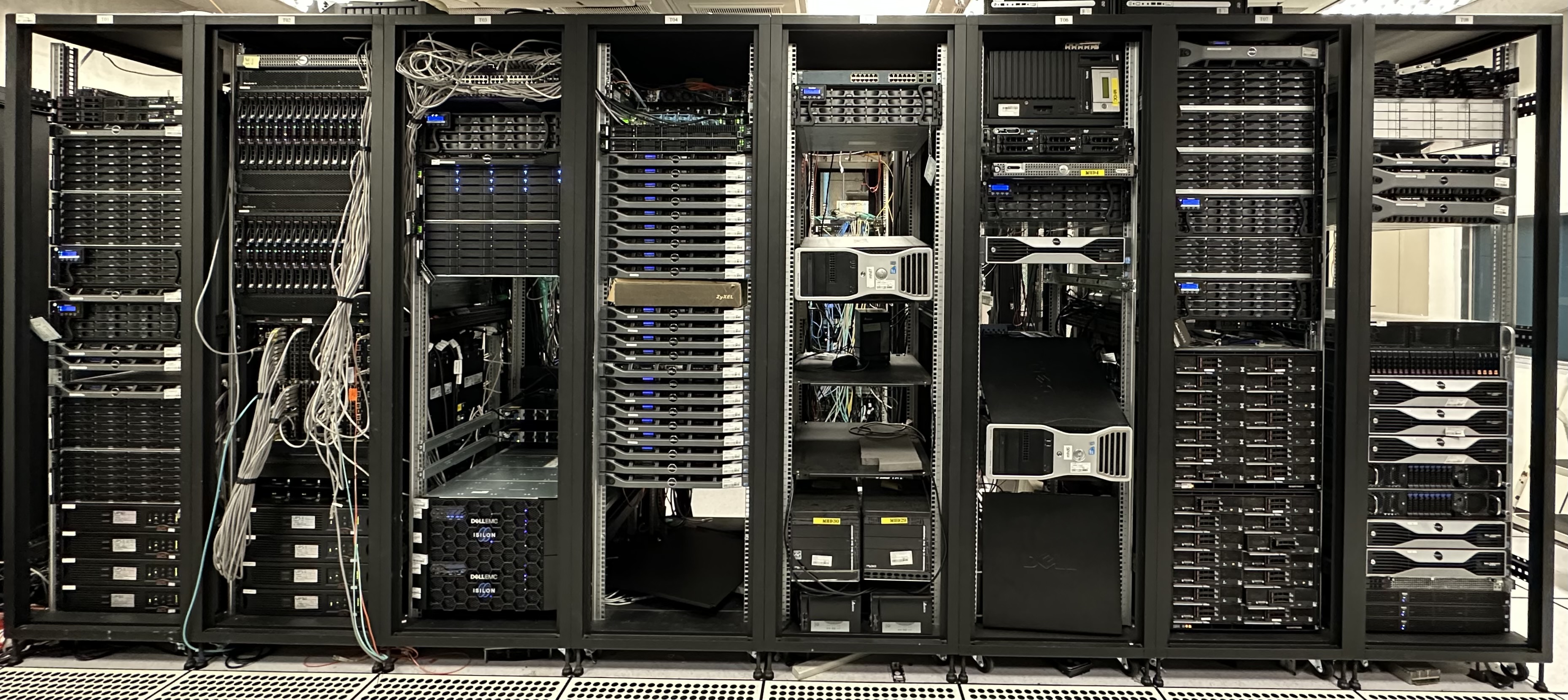

The HPC Cluster consists of a few head nodes and many compute nodes ("servers"). The queuing system provides access to separate "partitions", each covering a portion of the hardware from a different hardware generation. Nodes in the same partition can work together as a single "supercomputer", depending on the number of CPUs you request.

| Cluster | Cores | Nodes | CPU per node | Memory per node | Network | Year |
|---|---|---|---|---|---|---|
| xl | 1,664 | 103 | INTEL E5-2640 v3 @ 2.60GHz x 2 (16 Cores x 99), INTEL E5-2680 v3 @ 2.50GHz x 2 (24 Cores x 4) | 64GB, 256GB, 512GB | 10Gb Ethernet / 56Gb IB FDR | 2015, 2016 |
| kawas | 2,048 | 16 | AMD EPYC 7763 @ 2.45GHz x 2 (128 Cores) | 512GB | 25Gb Ethernet / 200Gb IB HDR | 2022 |

| Hostname | Nodes | CPU per node | GPU per node | Memory per node | Network | Year |
|---|---|---|---|---|---|---|
| gp8~11 | 4 | INTEL E5-2698 v4 @ 2.20GHz x 2 (40 Cores) | NVIDIA Tesla P100-SXM2 (3,584 FP32 CUDA Cores, 16GB) x 4 | 256GB | 10Gb Ethernet | 2016, 2017 |
| kawas17~18 | 2 | AMD EPYC 7643 @ 2.30GHz x 2 (96 Cores) | NVIDIA A100-SXM4-80GB (6,912 FP32 CUDA Cores, 80GB) x 8 | 1024GB | 25Gb Ethernet / 200Gb IB HDR | 2023 |

| Hostname | CPU per node | GPU per node | Memory | Network | Year |
|---|---|---|---|---|---|
| tumaz | INTEL E5-2620 v4 @ 2.10GHz x 2 (16 Cores) | NVIDIA TITAN X Pascal (3,584 FP32 CUDA Cores, 12GB) x 8 | 1.5TB | 10Gb Ethernet | 2018 |
| ngabul | INTEL Gold 5218 CPU @ 2.30GHz x 2 (32 Cores) | NVIDIA Quadro RTX 8000 (4,608 FP32 CUDA Cores, 48GB) x 8 | 1.5TB | 10Gb Ethernet | 2019 |
| kolong | AMD EPYC 7713 @ 2.00GHz x 2 (128 Cores) | NVIDIA RTX A6000 (10,752 FP32 CUDA Cores, 48GB) x 8 | 2.0TB | 10Gb Ethernet | 2021 |

To log in to the head nodes, you must first log in to the gate server (gate.tiara.sinica.edu.tw). Connections from the Academia Sinica Institute of Astronomy and Astrophysics (ASIAA) network are allowed directly. When you are outside the office, connect to the gate server via VPN, or visit our internal page and add your current IP address to the gate's whitelist.
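The two-hop login can be sketched in Python as below. This is a minimal sketch that assumes the gate permits SSH forwarding through OpenSSH's `-J` (ProxyJump) option, available since OpenSSH 7.3; if it does not, log in to the gate first and run `ssh` again from there. The username and head-node hostname are hypothetical placeholders, since this guide does not list head-node names.

```python
import shlex
import subprocess

GATE_HOST = "gate.tiara.sinica.edu.tw"  # gate server named in this guide


def build_ssh_command(username: str, head_node: str, gate: str = GATE_HOST) -> list[str]:
    """Build an OpenSSH command that reaches `head_node` through the gate.

    `-J` makes the gate an intermediate hop, so a single command replaces
    "log in to the gate, then ssh onward". `head_node` is a placeholder.
    """
    return ["ssh", "-J", f"{username}@{gate}", f"{username}@{head_node}"]


def connect(username: str, head_node: str) -> int:
    """Open an interactive session and return ssh's exit status."""
    cmd = build_ssh_command(username, head_node)
    print("Running:", shlex.join(cmd))  # show the equivalent shell command
    return subprocess.run(cmd).returncode
```

From a shell, the same hop is `ssh -J <username>@gate.tiara.sinica.edu.tw <username>@<head-node>`; putting an equivalent `ProxyJump` line in `~/.ssh/config` saves typing it each time.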

Data space (/tiara/ara/data) in the HPC file system is not backed up and should be treated as temporary by users. Only files necessary for actively running jobs should be kept on the file system, and files should be removed from the cluster when jobs complete. A copy of any essential files should be kept in an alternate, non-TIARA storage location.

Each TIARA user is initially allocated 500 GB of data storage space in their home directory (/tiara/home/username/). We can increase data quotas upon email request to sysman@tiara.sinica.edu.tw; please include a description of the data space needed for concurrent, active work. Each non-TIARA user is initially allocated 100 GB of data storage space in their home directory.
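Before asking for a larger quota, it helps to know how much space and how many files you are actually using. The sketch below walks a directory and totals file sizes and file counts. It is an approximation under stated assumptions: it sums apparent sizes rather than allocated blocks, treats the quota as decimal gigabytes, and skips symbolic links, so it may not match the figure the file system's quota accounting reports.

```python
import os

# Assumption: quotas are counted in decimal gigabytes; the guide does not say.
GB = 1000 ** 3


def directory_usage(path: str) -> tuple[int, int]:
    """Return (total_bytes, file_count) for regular files under `path`.

    Symbolic links are skipped and apparent sizes are summed, so the
    result can differ from what the quota system reports.
    """
    total_bytes = 0
    file_count = 0
    for root, _dirs, files in os.walk(path):  # does not descend into symlinked dirs
        for name in files:
            full = os.path.join(root, name)
            if os.path.islink(full):
                continue
            try:
                total_bytes += os.stat(full).st_size
            except OSError:
                continue  # file vanished or is unreadable; skip it
            file_count += 1
    return total_bytes, file_count


def quota_report(path: str, quota_gb: float = 500) -> str:
    """Summarise usage of `path` against a quota (500 GB default for TIARA users)."""
    used, count = directory_usage(path)
    percent = 100 * used / (quota_gb * GB)
    return f"{path}: {used / GB:.2f} GB of {quota_gb:g} GB ({percent:.1f}%), {count} files"
```

Calling `quota_report("/tiara/home/username")` reports usage against the 500 GB default; pass `quota_gb=100` for a non-TIARA account. GNU `du -sh --apparent-size` gives a comparable total from the shell.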

TIARA System Staff reserve the right to remove significant amounts of data on the HPC Cluster in our efforts to maintain filesystem performance for all users, though we will always first ask users to remove excess data and minimize file counts before taking additional action.

Scratch space is available on each execute node in /scratch/data and is automatically cleaned out after 45 days.
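To see which scratch files have passed, or are approaching, the 45-day limit, a sketch like the following lists files whose last modification is older than a given number of days. It assumes the cleanup is based on modification time (mtime); the guide does not say which timestamp the cleanup job actually uses, so treat the result as an estimate.

```python
import os
import time
from typing import List, Optional

SCRATCH_DIR = "/scratch/data"  # scratch location named in this guide
RETENTION_DAYS = 45            # cleanup period named in this guide
SECONDS_PER_DAY = 86400


def files_past_retention(root: str = SCRATCH_DIR,
                         days: int = RETENTION_DAYS,
                         now: Optional[float] = None) -> List[str]:
    """Return files under `root` last modified more than `days` days ago.

    Assumption: the cleanup keys on mtime; the real cleanup job may differ.
    """
    cutoff = (time.time() if now is None else now) - days * SECONDS_PER_DAY
    stale = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                mtime = os.lstat(path).st_mtime
            except OSError:
                continue  # removed or unreadable while scanning
            if mtime < cutoff:
                stale.append(path)
    return sorted(stale)
```

Passing a smaller value, for example `files_past_retention(days=40)`, gives an early warning of files likely to be removed within the next few days.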

For more details, please refer to the Storage Usage Policy.

The HPC Cluster uses PBS Pro as its job scheduler. You can read more about submitting jobs to the queue in the PBS User Guide; we also provide a simple getting-started guide on our WordPress page.
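A PBS Pro batch script is a shell script whose `#PBS` comment lines carry the resource request. The sketch below builds one and hands it to `qsub`. The queue name, program, and resource values are hypothetical placeholders; check the WordPress getting-started guide for the queue names actually configured on the cluster.

```python
import os
import subprocess
import tempfile


def make_pbs_script(name: str, queue: str, nodes: int, ncpus: int,
                    walltime: str, command: str) -> str:
    """Return the text of a PBS Pro batch script.

    `queue` and `command` are hypothetical placeholders.
    """
    return "\n".join([
        "#!/bin/bash",
        f"#PBS -N {name}",                                         # job name
        f"#PBS -q {queue}",                                        # destination queue
        f"#PBS -l select={nodes}:ncpus={ncpus}:mpiprocs={ncpus}",  # chunks x cores per chunk
        f"#PBS -l walltime={walltime}",                            # HH:MM:SS limit
        "#PBS -j oe",                                              # merge stderr into stdout
        "",
        'cd "$PBS_O_WORKDIR"',                                     # start in the submission directory
        command,
        "",
    ])


def submit(script_text: str) -> str:
    """Submit a script with qsub and return the job ID it prints."""
    with tempfile.NamedTemporaryFile("w", suffix=".pbs", delete=False) as fh:
        fh.write(script_text)
        path = fh.name
    try:
        result = subprocess.run(["qsub", path], capture_output=True,
                                text=True, check=True)
    finally:
        os.remove(path)  # qsub copies the script at submission time
    return result.stdout.strip()
```

For example, `make_pbs_script("demo", "xl", 2, 16, "01:00:00", "mpirun ./my_program")` requests two 16-core chunks for one hour; saving the returned text to a file and running `qsub` on it by hand is equivalent to calling `submit`.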

The XL Cluster partition is good for running small, medium or serial jobs.

The Kawas Cluster partition is best for running production and large jobs.
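Because nodes in a partition pool their cores, a job's size translates into a number of node-sized chunks in the PBS Pro `select` statement. The sketch below does that arithmetic using the cores-per-node figures from the hardware tables (16 for most xl nodes, 128 for kawas nodes). The partition keys and the even-spreading rule are illustrative assumptions, not a site policy.

```python
import math

# Cores per node from the hardware tables. For xl this uses the 16-core
# nodes (99 of 103); the four 24-core nodes are ignored in this sketch.
CORES_PER_NODE = {"xl": 16, "kawas": 128}


def select_statement(partition: str, total_cores: int) -> str:
    """Return a PBS Pro select string whose chunks each fit on one node."""
    if total_cores < 1:
        raise ValueError("total_cores must be at least 1")
    per_node = CORES_PER_NODE[partition]  # KeyError for unknown partitions
    if total_cores <= per_node:
        # Small and serial jobs fit in a single chunk.
        return f"select=1:ncpus={total_cores}:mpiprocs={total_cores}"
    nodes = math.ceil(total_cores / per_node)
    # Spread cores evenly over the chunks, rounding up so none are lost.
    per_chunk = math.ceil(total_cores / nodes)
    return f"select={nodes}:ncpus={per_chunk}:mpiprocs={per_chunk}"
```

`select_statement("kawas", 256)` returns `"select=2:ncpus=128:mpiprocs=128"`, two full kawas nodes, while `select_statement("xl", 8)` returns a single 8-core chunk. When the core count does not divide evenly, the per-chunk count is rounded up, so slightly more cores than requested may be reserved.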